Lauren's Crib

I built Lauren’s Crib, an experimental AI chatbot that serves as an empathetic relationship advisor using the unfiltered data of our deepest thoughts: the chat messages we share with loved ones.

2025

—Laurensius Rivian Pratama - Solo Developer

Roles

My Role

- Led concept development and overall interaction flow for the experience

- Designed the analysis and feedback schema for relationship insights

- Built and refined the prompt system that drives the two AI personas

- Developed the interface and basic backend to handle uploads and responses

- Ran informal pilot testing and translated user reactions into design changes

Theoretical Lens

- Algorithmic ethics

- Somatic design

- Relationship psychology

Methodology

- Experiential prototyping

- Prompt engineering

- Qualitative feedback

Background

After Sitting with Jakarta, I wanted to get closer to people’s minds. The chair project had already turned a piece of furniture into a kind of witness that reflects a person back in a strange way, yet it still stayed at the level of symbols and archetypes. I started to wonder what would happen if the “material” was not a chair in a story but traces of a real relationship. I became curious whether an AI system could read patterns inside everyday interactions and mirror them back in a way that feels painfully honest, almost like a late-night conversation with a friend who knows you too well.

Lauren’s Crib is my attempt to explore AI as an empathetic presence that sits between people and reflects their bond, while I also wanted the experience to feel slightly unsafe so the ethical line stays visible. The project tests how far an AI can go when it has access to more personal data and tries to respond with warmth. Building it turned into a way to study where comfort turns into exposure, how much people are willing to share with a machine that seems to understand them, and how design choices shape the balance between care, consent, and surveillance.

In the middle of algorithmic social media, I sensed that AI already reads our behaviour and desires surprisingly well, so I began to ask how we could use the power of AI in a conscious way, instead of letting it quietly use us.

Overview

It all started when two close friends of mine, who were a couple, went through a heavy fight and both began venting to me from opposite sides of the story. Each of them sounded right in their own way, and I could not judge fairly without the full context of their conversations. From previous experiments with AI I had already seen how strong large models are at summarising long, messy text, so I began to look at chat history as the most intimate and honest record of a relationship, much clearer than what people say when they are emotional.

What does it do

Intention

I wanted to build an AI presence that can sit in the middle of a relationship with the warmth of a close friend and the distance of a careful observer. I wanted an entity that does not take sides, that reads the full conversation history and reflects back patterns that people often miss when they are hurt or defensive. It should highlight small acts of care that go unnoticed, point out repeated habits that cause harm, and remind people where their values overlap.

I see it as an experiment in empathetic AI where language models try to respond with kindness, clarity, and grounded insight while still being honest about power imbalance, emotional labour, and unspoken resentment inside the chat logs.

Data Extraction and Consent

Lauren’s Crib works with one of the most sensitive kinds of data in daily life: private chat logs between people. Users must export and upload their own logs through clear steps, and I ask them to share only conversations they own and to anonymise names where possible. The disclaimer states that responsibility for what gets uploaded stays with the user and that they should move carefully when a third person appears in the chats. Each screen reminds them that chat analysis is an experiment and not a medical tool or a replacement for therapy, counselling, or direct conversation with a partner. I should keep the privacy concern visible at every step so that people stay aware they are sharing a very intimate space with an AI that is owned by the inference company, and so that they can decide for themselves where their ethical limits sit when they hand their conversations to a machine.
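The request to anonymise names before uploading could be supported by a small helper like the one below. This is only a sketch: the function name `pseudonymise`, the `Person A` style labels, and the plain-text export format are all assumptions for illustration, not part of the actual app.

```python
import re


def pseudonymise(chat_text: str, names: list[str]) -> str:
    """Replace each listed name with a neutral label (Person A, Person B, ...).

    Hypothetical helper: it only catches the exact names it is given, so
    nicknames, usernames, and phone numbers would still need manual review.
    """
    if len(names) > 26:
        raise ValueError("this sketch only supports up to 26 names")

    # Labels follow the order in which the names were listed.
    labels = {name: f"Person {chr(ord('A') + i)}" for i, name in enumerate(names)}

    result = chat_text
    # Replace longer names first so "Anna Maria" is handled before "Anna".
    for name in sorted(names, key=len, reverse=True):
        pattern = re.compile(rf"\b{re.escape(name)}\b", re.IGNORECASE)
        result = pattern.sub(labels[name], result)
    return result
```

Running it on a two-line log such as `"Rina: hey Dito, are you home?"` with `["Rina", "Dito"]` would swap both names for `Person A` and `Person B` while leaving the rest of the message untouched.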

Pilot Testing

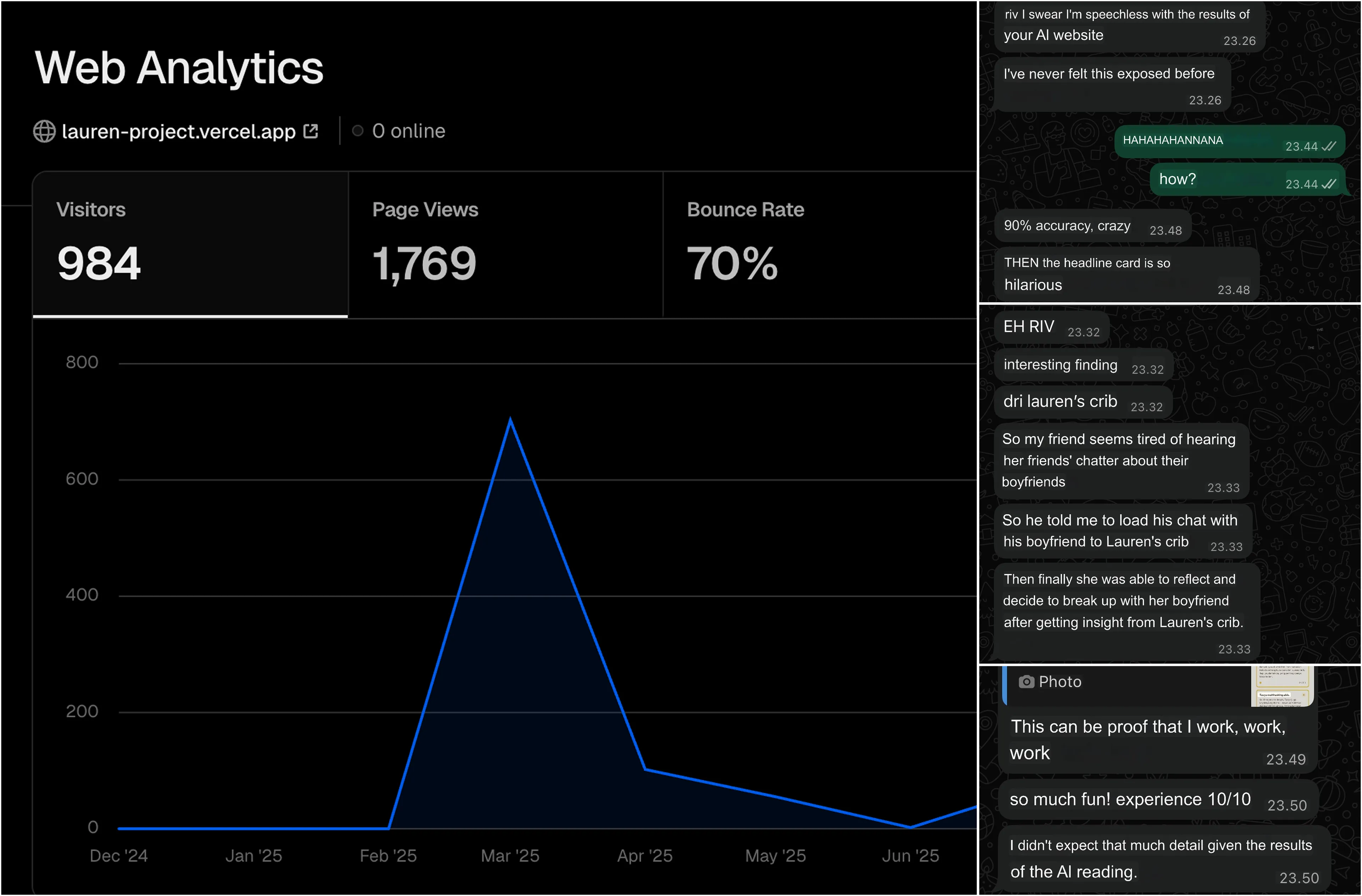

During an informal pilot test I shared Lauren’s Crib with friends through my Instagram story and asked them to try it with their real chat logs.

A lot of my friends described the responses as uncanny: the app seemed capable of understanding their habits and emotional blind spots in a way they usually expect only from a close friend.

One friend who had been stuck in a long breakup process even said the interaction with the app helped them finally decide to end the relationship.

But at the same time a lot of people refused to upload their chats even after reading the disclaimer, since the idea of handing such private messages to an AI felt too risky.

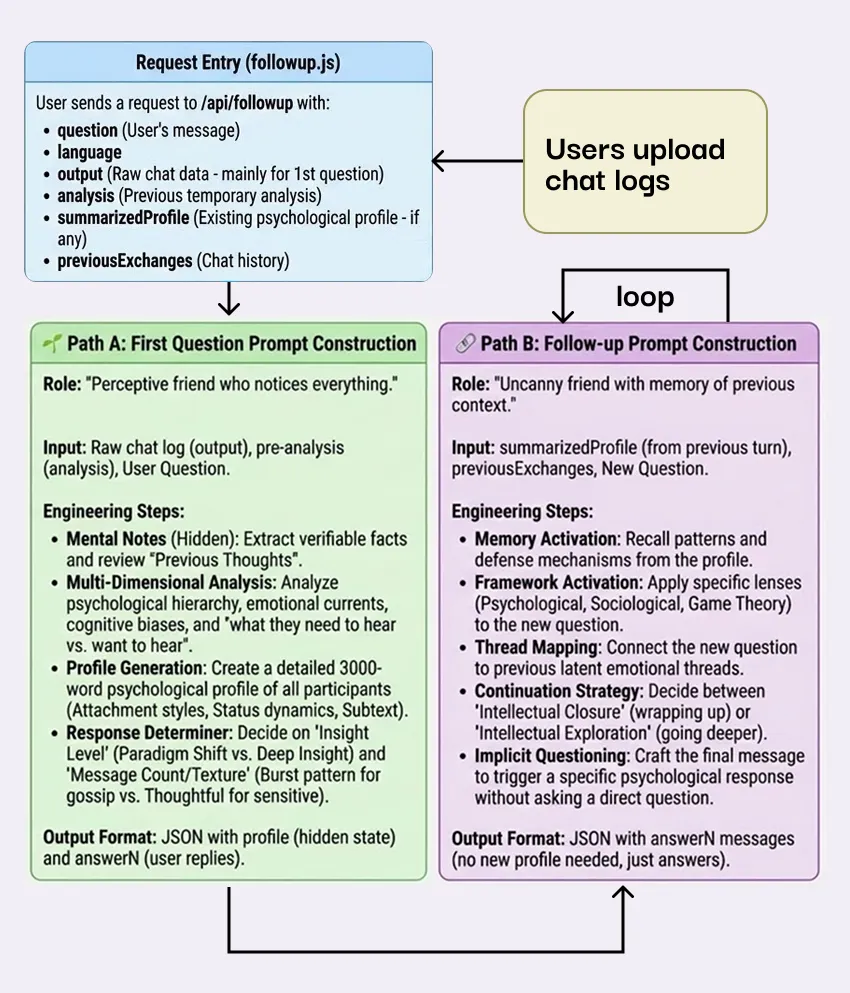

Technical Aspects

Prompt Engineering

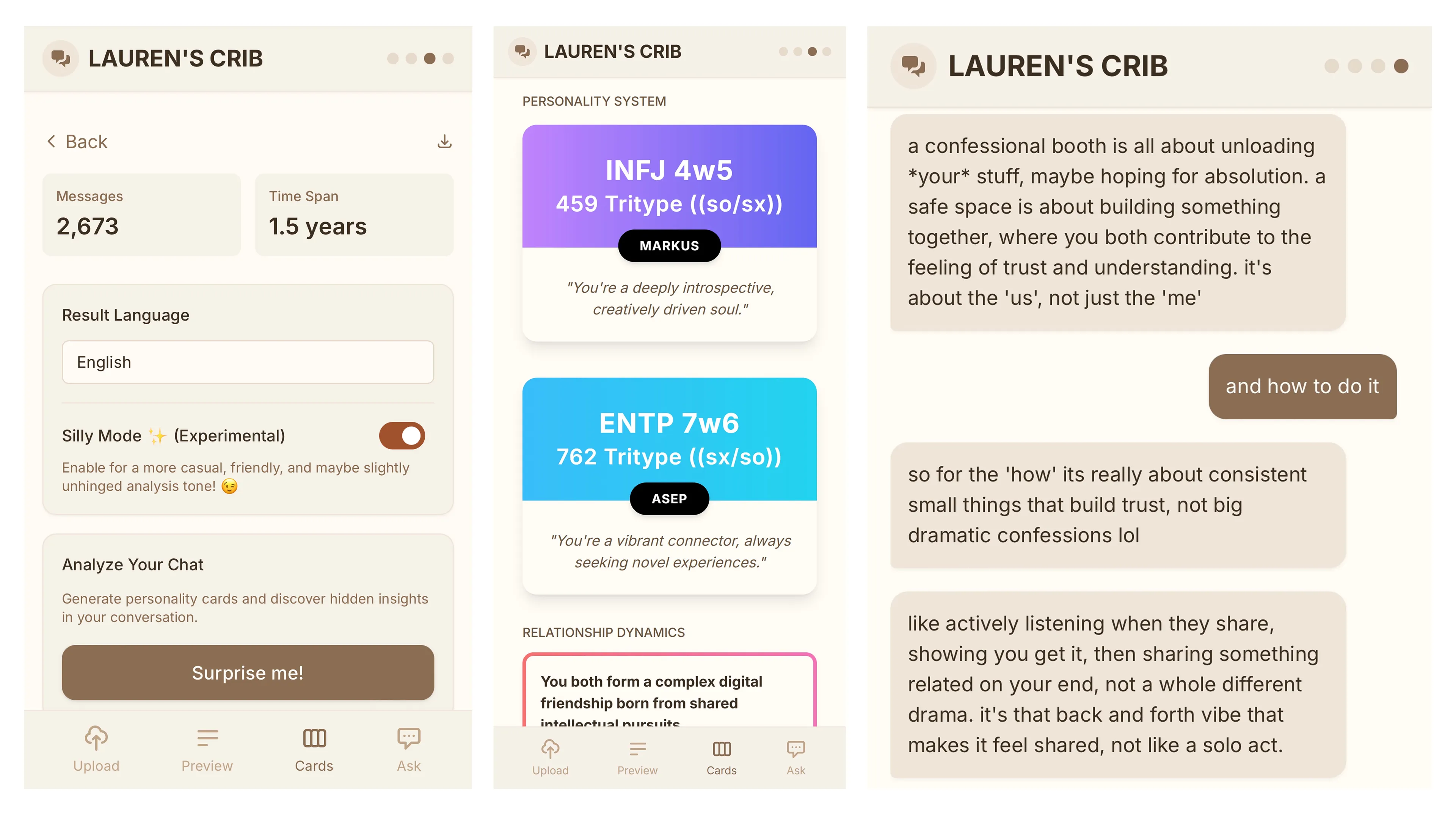

I used multiple layers of prompting techniques to reach that level of warmth and precision.

First, I had the model imagine that it is a world-class psychologist with a slightly desperate backstory, which puts it into a very analytic and unsentimental mindset. Into that prompt I poured a long block of hand-picked theory about MBTI cognitive functions, Enneagram patterns, and basic behavioural psychology.

This first persona scans for patterns in word choice, timing, power balance, and recurring conflicts, and then builds a structured psychological profile before it ever speaks to the user.

Second, I had the model imagine that it is more like a perceptive friend from the group chat. The prompt tells the model to think in two stages.

It writes long internal notes that the user never sees, where it debates possible interpretations and weighs the evidence from the chat. After that it compresses those notes into short, casual answers that sound human and grounded instead of clinical.

With these techniques, the AI feels strangely present and consistent, even though it runs on top of the same models everyone else uses.
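The two personas and the hidden-notes step can be sketched roughly as follows. Everything here is an assumption made for illustration: the prompt wording, the `<notes>` and `<reply>` tags, and the `Model` callable (which stands in for whatever inference API is actually used) are hypothetical and not taken from the real prompt system.

```python
import re
from typing import Callable

# Hypothetical persona prompts; the real prompts are much longer.
ANALYST_PROMPT = (
    "You are a world-class psychologist. Read the chat log and produce a "
    "structured profile covering word choice, timing, power balance, and "
    "recurring conflicts."
)
FRIEND_PROMPT = (
    "You are a perceptive friend from the group chat. First write private "
    "reasoning inside <notes>...</notes>, then a short, casual answer inside "
    "<reply>...</reply>."
)

# Assumed interface: (system_prompt, user_content) -> raw model text.
Model = Callable[[str, str], str]


def split_notes_and_reply(raw: str) -> tuple[str, str]:
    """Separate the hidden notes from the text that is shown to the user."""
    notes = re.search(r"<notes>(.*?)</notes>", raw, re.DOTALL)
    reply = re.search(r"<reply>(.*?)</reply>", raw, re.DOTALL)
    if reply is None:
        # Fail closed so internal notes are never shown by accident.
        raise ValueError("model output has no <reply> section")
    return (notes.group(1).strip() if notes else "", reply.group(1).strip())


def respond(model: Model, chat_log: str, question: str) -> str:
    """Run the analyst persona first, then the friend persona on its profile."""
    profile = model(ANALYST_PROMPT, chat_log)
    raw = model(FRIEND_PROMPT, f"Profile:\n{profile}\n\nUser question:\n{question}")
    _notes, reply = split_notes_and_reply(raw)  # notes stay internal
    return reply
```

Passing the model in as a plain callable keeps the sketch independent of any specific provider and makes it easy to test with a stub that returns canned text.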

Data Presentation

Lauren’s Crib shows the analysis as a series of simple panels so people can read heavy psychological content the way they would scroll through a friend’s recap.

A. Relationship snapshot

The first card gives a score on a zero to ten scale, one key word for the whole relationship, a sharp title that sounds like a blunt verdict from a friend, and a short explanation that sums up what is happening between the two people in plain language.

B. Personality and dynamics map

The next section shows MBTI, Enneagram, tritype, and instinct stack for each person, then adds a one-sentence archetype line. It explains how each person speaks, how they handle conflict, who leads, who withdraws, and how their personalities lock together in daily conversation.

C. Moments, patterns, and flags

Another block lists the main topics, the strongest highs and lows in the chat history, and marks clear red flags and green flags. The goal is to show where the relationship is fragile and where it is quietly strong, so users see a pattern over time.

D. Playful hooks and follow up questions

The final layer adds short “interesting facts” about the chat, one provocative question where the AI picks a side in a slightly chaotic way, and a set of deeper questions that users can think about or discuss together.
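The four panels above map naturally onto a structured result that the interface can render. The sketch below shows one possible shape using dataclasses; the class and field names are hypothetical and chosen only to mirror the panel descriptions, not copied from the actual schema.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class RelationshipSnapshot:  # Panel A
    score: float  # zero to ten scale
    keyword: str
    title: str
    explanation: str

    def __post_init__(self) -> None:
        if not 0 <= self.score <= 10:
            raise ValueError("score must be between 0 and 10")


@dataclass
class PersonProfile:  # Panel B, one per person
    name: str
    mbti: str
    enneagram: str
    tritype: str
    instinct_stack: str
    archetype: str  # one-sentence archetype line


@dataclass
class Patterns:  # Panel C
    topics: list[str] = field(default_factory=list)
    highs: list[str] = field(default_factory=list)
    lows: list[str] = field(default_factory=list)
    red_flags: list[str] = field(default_factory=list)
    green_flags: list[str] = field(default_factory=list)


@dataclass
class Hooks:  # Panel D
    interesting_facts: list[str] = field(default_factory=list)
    provocative_question: str = ""
    deeper_questions: list[str] = field(default_factory=list)


@dataclass
class Analysis:
    snapshot: RelationshipSnapshot
    people: list[PersonProfile]
    patterns: Patterns = field(default_factory=Patterns)
    hooks: Hooks = field(default_factory=Hooks)
```

Calling `asdict(analysis)` on an `Analysis` instance gives a nested dictionary that could be serialised to JSON for the front end, and the range check in `RelationshipSnapshot` rejects scores outside the zero to ten scale before anything is displayed.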

What did I learn?

Can AI be more objective than friends?

My informal pilot suggested that people can be willing to trust an AI summary of their relationship more than the opinions of someone close to them. The combination of cold pattern analysis and warm chat tone created a sense of being deeply understood that many users said they rarely get from humans. That response points to a new space for empathetic tools that sit between therapist, friend, and mirror, and it also raises questions about how much power such systems hold over real-life choices.

Framing of AI as an empathetic figure?

The project showed that prompt design and persona choices change the behaviour of the AI. A playful, low-pressure texting style made objective reasoning easier to digest, which is useful for reflection and at the same time makes it easy to forget that this is an automated system. Any future research on empathetic AI needs to consider tone, humour, and wording as part of the ethical concerns, since they control how persuasive the system becomes during vulnerable moments.

Ethical risks of AI need to be studied...

Friends were flabbergasted by how sharp and human-like the feedback felt, yet many still hesitated to upload full chat histories because they saw them as too private and risky to share. We need clear research directions around consent flows, on-device processing, and narrow data windows that give people comfort without draining the emotional power of the tool. The most interesting opportunity sits exactly in this gap, where people want deeper support but still want to protect the parts of their lives that should stay unwatched.

What's next

Building Lauren’s Crib showed me how easily a careful prompt and a pile of chat logs can turn into something that shapes how people see themselves and their partners. The next step is to slow down and ask harder questions about who should create systems like this, who they serve, and what kinds of emotional dependence they quietly invite.

We need to stay critical about how we use technology, what kinds of intimacy we outsource to it, and what that means for human judgment, responsibility, and the way we live together.